Design a recruiting post for a graphic designer job opening. Encourage interested candidates to send their CV to yourname@email.com

Design a gift certificate for a travel insurance company promoting their services with a 30% discount offer. The certificate should convey a sense of safety and protection while travelling.

Create a series of posters for a fictional Ancient Greek Olympics, with each poster highlighting a different sport like chariot racing or discus throw, styled in traditional Greek pottery art.

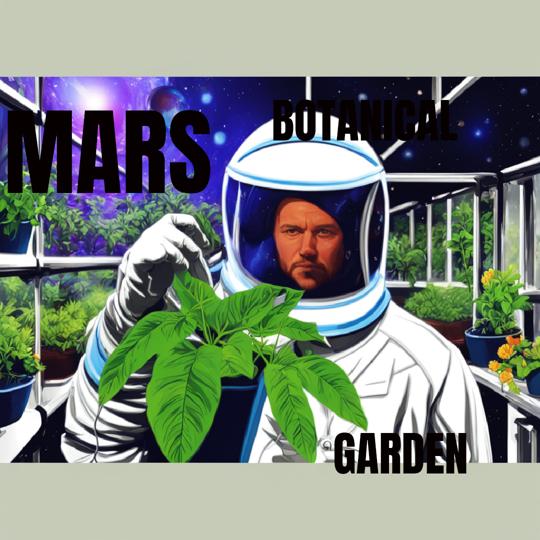

Design an attractive poster for a Mars botanical garden tour. Illustrate a greenhouse with a variety of extraterrestrial plants. Use vibrant colors to depict the potential biodiversity on Mars.

Create an enticing poster for a classical orchestra concert in the heart of the Amazon Rainforest. (...). Incorporate subtle hints of wildlife and flora within the musical instruments.

Design a promotional poster for a Titanic-themed immersive theatre experience. (...). It should also tease some of the scenes from the play, such as the grand dining hall and the ship's deck.

Create a coupon that promotes a garden design service offered by Yard Culture. The coupon is a gift voucher valued at $49 and can only be used once. It is valid until November 15th. The coupon number is No:7496-4587.

Promote a stand-up comedy show happening on November 12th, 20xx.

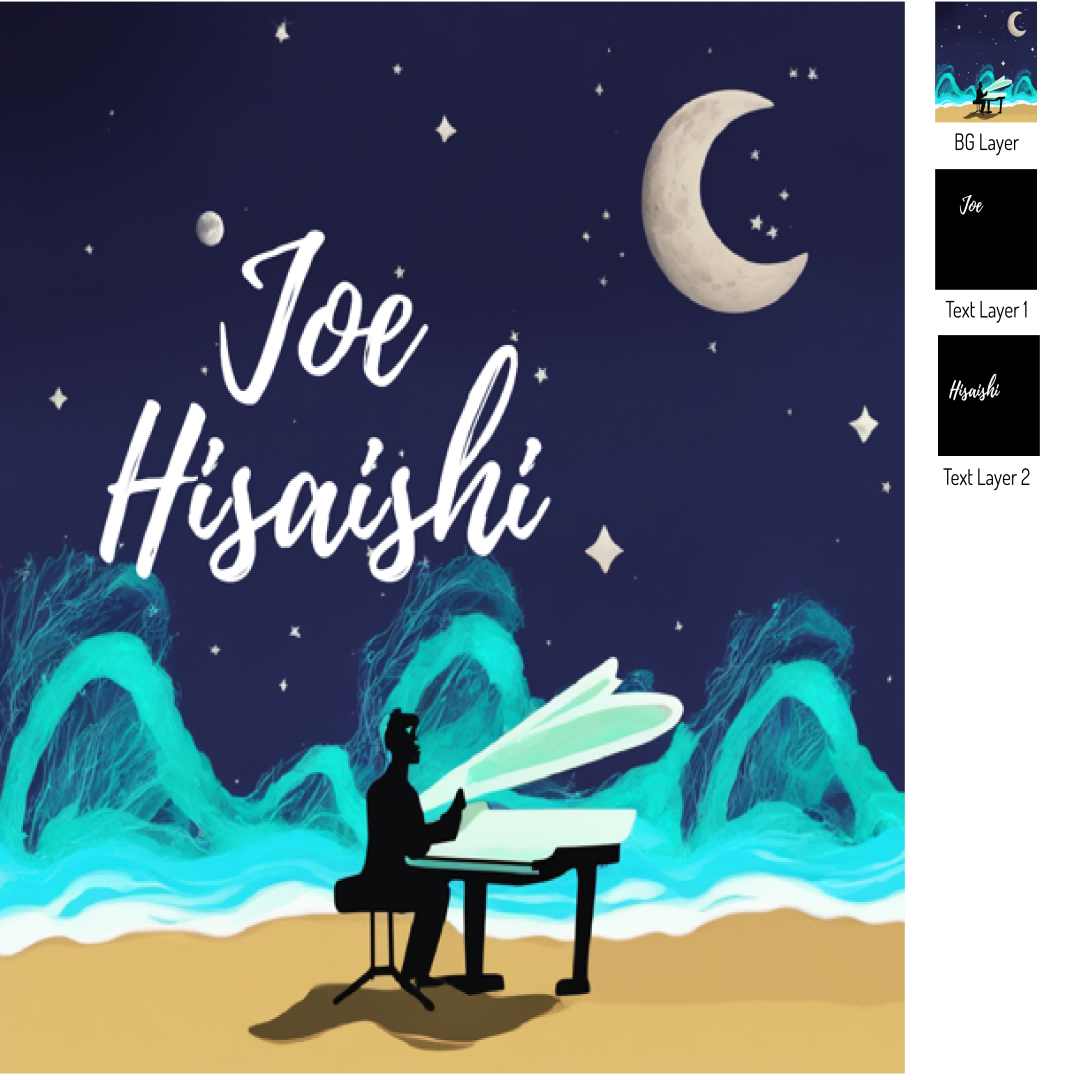

Design an animated digital poster for a fantasy concert where Joe Hisaishi performs on a grand piano on a moonlit beach. The scene should be magical, with bioluminescent waves and a starry sky. The poster should invite viewers to an exclusive virtual event.

Create an Instagram story promoting a special Christmas offer for a chocolate drink. The offer includes a 20% discount and encourages customers to order now.

Create a customer testimonial advertisement showcasing a pink clothing collection. The advertisement includes a positive review from Jenny Wilson.

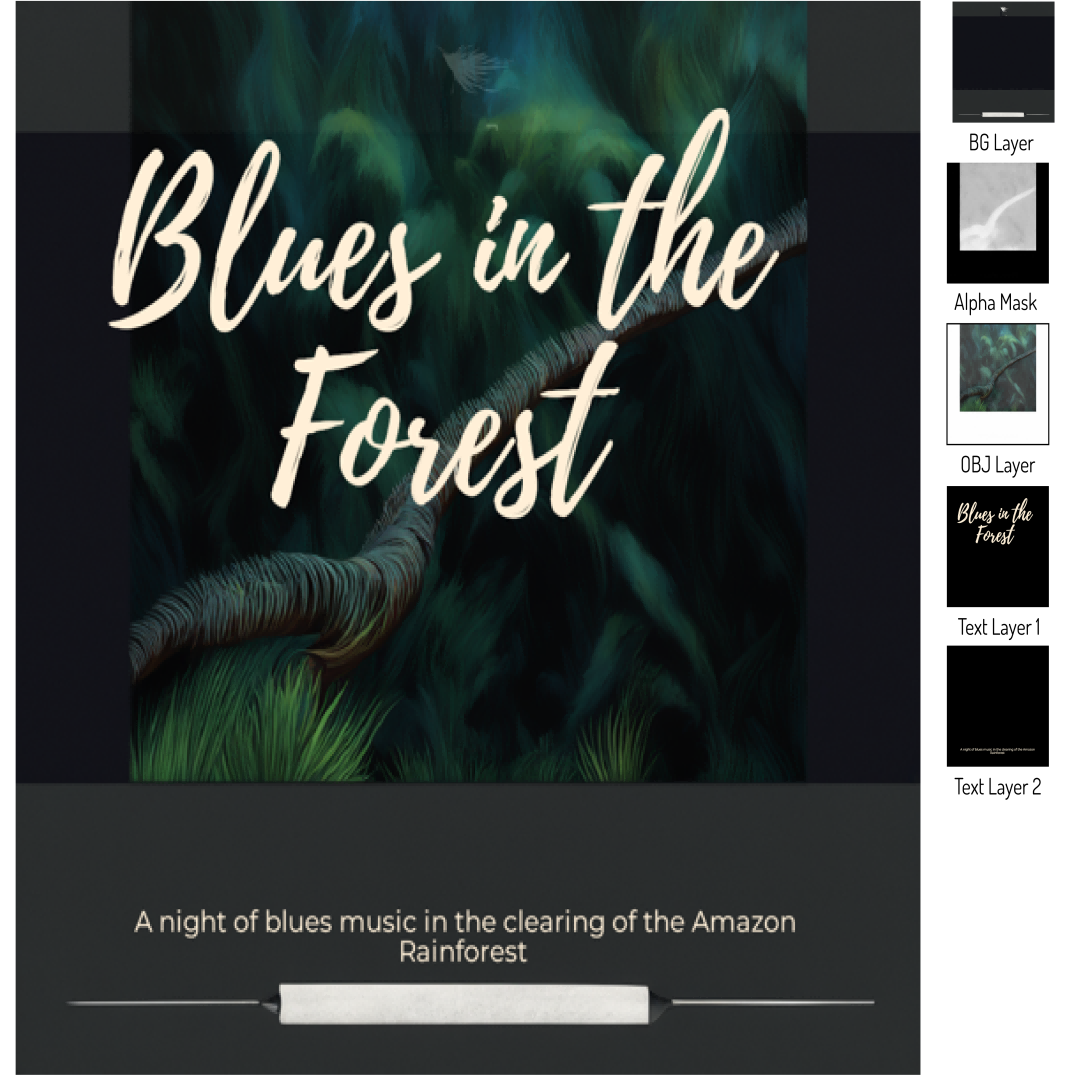

Design an alluring poster for a blues concert in a clearing of the Amazon Rainforest. (...). Use a moody, midnight blue palette and incorporate silhouettes of blues instruments and nocturnal wildlife.

Design an advertisement card to promote the sale of premium quality school equipment, with a focus on enhancing the learning experience for students.

Create a business card for a travel agency that offers vacation planning services. The design features a red suitcase to represent travel. The contact information includes phone number +123-456-7890 and website www.yourwebsite.com.

Create a poster advertising a corner spice shop with a wide range of spices and ingredients.

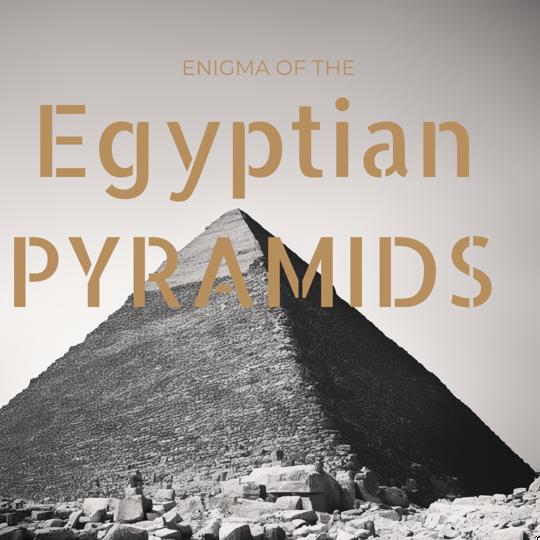

Create a captivating poster for an upcoming documentary about the mysteries of the Egyptian pyramids. (...). Use a muted color palette to imitate the sands of Egypt and the age-old structures.

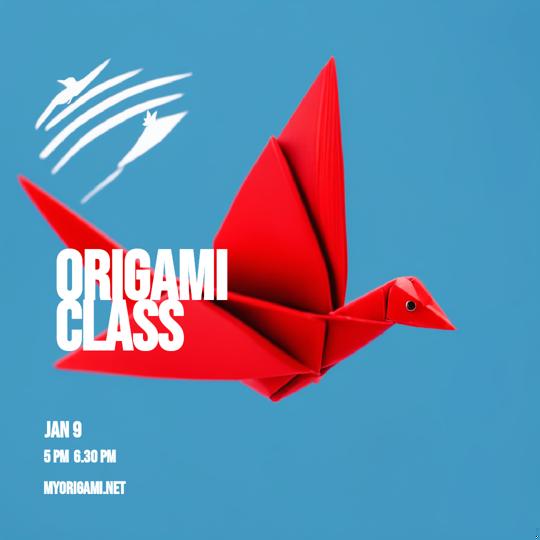

Create an invitation card for an origami class that teaches attendees how to make flowers and animals. The class is from 5 PM to 6:30 PM on Jan 9th. More information is available on the website myorigami.net.

Design a festive Christmas greeting card with a cute and playful illustration that features a winter scene with trees, snowflakes and a festive greeting. The card is part of the HelloChristmas series and features a red and white color scheme.

Design an elegant invitation for a wedding dinner rehearsal, with a romantic and festive feel. The event date is August 10, 2022 and the names are Dana and Alan.

Create a Valentine's Day greeting card with a romantic and playful design that expresses love. The texts 'Happy Valentine's Day' and 'I Love You' are included.

Design a thank you card with a floral theme and a message expressing gratitude. The card is intended to be used for business purposes and features the company logo.

Design a premium, high-quality emblem for a coffee house that emphasizes the freshness and aroma of the coffee served. The text 'Coffee & More' is included to show that there is more to offer than just coffee.

Design a poster to promote a charity bike ride event to raise funds and support for a humanitarian cause. The event will be held on June 26th and the website for registration and donation is www.funbike.com.

Promote camping equipment for adventurous couples who enjoy spending time in nature and camping. Encourage them to order now through the ad on Instagram.